Did Melania Trump plagiarise Michelle Obama’s speech?

In 2016, during the Republican National Convention, Melania Trump gave a speech in support of Donald Trump’s campaign. As soon as the convention concluded, Twitter users noted similarities between some of Mrs Trump’s lines and a speech Michelle Obama had given eight years earlier at the Democratic National Convention. Melania and her husband were criticised, and the campaign team defended them, arguing that the speech was written from notes and real-life experiences.

How did Twitter users notice the similarities? On the one hand, some lines were exactly the same in both speeches; on the other hand, as this article from USA Today put it:

It’s not entirely a verbatim match, but the two sections bear considerable similarity in wording, construction and themes.

If you were to automate the detection of those similarities, what approach would you take? A first technique would be to compare both texts word by word, but this does not scale well: you would have to compare every possible sequence of consecutive words in one text against the other. Fortunately, NLP gives us a clever solution.
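To make the naïve approach concrete, here is a minimal sketch that collects every run of n consecutive words from each text and intersects the two sets. The function names and the toy sentences are my own, invented for illustration.

```python
def word_ngrams(text, n):
    """Return the set of all runs of n consecutive words in text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def shared_ngrams(text_a, text_b, n=3):
    """Return the n-word runs that appear in both texts."""
    return word_ngrams(text_a, n) & word_ngrams(text_b, n)


# Toy sentences, invented for illustration.
first = "the quick brown fox jumps over the lazy dog"
second = "a quick brown fox sleeps near the lazy dog"

common = shared_ngrams(first, second, n=3)
print(sorted(common))
```

This only finds exact matches, and it has to be repeated for every length n you care about, which is why it does not scale and why it misses lines that were reworded.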

What are we going to do?

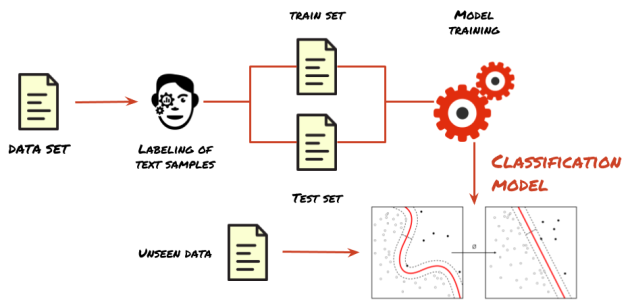

There is a core NLP task called text similarity, which addresses the problem we just stated: how do you compare texts without resorting to a naïve and inefficient approach? To do so, you need to transform the texts into a common representation and then define a metric to compare them.

In the following sections you will see the mathematical concepts behind the approach, a code example explained in detail so you can repeat the process yourself, and the answer to the original question: did Melania plagiarise or not?

Text Similarity Concepts

TF-IDF

Straight to the point: the text is transformed into a vector. The words are then called features. Each position in the vector represents a feature, and the value at that position depends on the method you use. One way to do it is to count how many times the word appears in the text, divide that count by the total number of terms in the document, and assign the result to the vector position for that feature. This is called Term Frequency, or TF.
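As a rough sketch of that definition, term frequency can be computed with nothing more than a counter. The function name and the sample sentence are made up for the example, and splitting on whitespace is a simplifying assumption.

```python
from collections import Counter


def term_frequency(text):
    """Map each word to (occurrences of the word) / (total words in text)."""
    words = text.lower().split()
    counts = Counter(words)
    total = len(words)
    return {word: count / total for word, count in counts.items()}


tf = term_frequency("the cat saw the dog")
print(tf["the"])  # 2 of the 5 words are "the", so 0.4
```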

Term frequency alone may give weight to words that are common in the document but not necessarily important; they may be stopwords. Stopwords are words that add little meaning to a text, such as articles, pronouns or modal verbs: I, you, the, that, would, could, and so on.

To know how important a word is in a particular document, Inverse Document Frequency, or IDF, is used. IDF measures how distinctive a term is by counting how many documents in the corpus contain it: the fewer documents contain the term, the higher its IDF.

IDF is computed as log(N/df_t), where N is the number of documents in the corpus and df_t is the number of documents containing a term t. If all the documents in the corpus contain the term t, then N/df_t equals 1 and log(1) = 0, which means the term is not representative because, to emphasise again, it appears in every document.
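The same reasoning in code, as a small sketch of the textbook log(N/df_t) definition. The helper name and the three-document toy corpus are assumptions made for the example.

```python
import math


def inverse_document_frequency(corpus):
    """Map each term t to log(N / df_t) over a list of documents."""
    tokenized = [set(doc.lower().split()) for doc in corpus]
    n_docs = len(tokenized)
    vocabulary = set().union(*tokenized)
    return {
        term: math.log(n_docs / sum(term in doc for doc in tokenized))
        for term in vocabulary
    }


corpus = ["the cat sat", "the dog barked", "the bird sang"]
idf = inverse_document_frequency(corpus)
print(idf["the"])  # "the" is in every document: log(3 / 3) = 0.0
print(idf["cat"])  # "cat" is in one document: log(3 / 1)
```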

Term frequency–inverse document frequency, or TF-IDF, combines the two previous metrics: if a word is present in a document but also appears in all the other documents of the corpus, it is not a representative word and TF-IDF gives it a low weight. Conversely, if a word appears many times in a document and only in that document, TF-IDF gives it a high weight.

The TF-IDF values are calculated for each feature (word) and assigned to the vector.
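Library implementations do not always follow the textbook formula to the letter. As far as I can tell from its documentation, scikit-learn's TfidfVectorizer with default settings uses raw counts for TF, a smoothed IDF of ln((1 + N) / (1 + df_t)) + 1, and then scales each document vector to unit length. The sketch below rebuilds those steps by hand on two invented documents and compares the result with the library; the hand tokenisation is a simplification that only works because the toy documents need no cleaning.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer

docs = ["the cat sat on the mat", "the dog sat"]

# Tokenise by hand; these toy documents need no extra cleaning.
tokenized = [doc.split() for doc in docs]
vocabulary = sorted(set(word for doc in tokenized for word in doc))

# Raw term counts: one row per document, one column per word.
counts = np.array(
    [[doc.count(word) for word in vocabulary] for doc in tokenized],
    dtype=float,
)

# Smoothed IDF, assuming scikit-learn's default smooth_idf=True.
n_docs = len(docs)
doc_freq = (counts > 0).sum(axis=0)
idf = np.log((1 + n_docs) / (1 + doc_freq)) + 1

# Weight the counts and scale every row to unit length (L2 norm).
weights = counts * idf
manual = weights / np.linalg.norm(weights, axis=1, keepdims=True)

library = TfidfVectorizer().fit_transform(docs).toarray()
print(np.allclose(manual, library))
```

Two consequences are worth keeping in mind: with this smoothing, a word that appears in every document gets a small weight instead of exactly zero, and dividing the counts by the document length makes no difference once each vector is scaled to unit length.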

Cosine Similarity

Having the texts in the vector representation, it’s time to compare them, so how do you compare vectors?

It’s easy to turn text into vectors in Python. Let’s see an example:

    from sklearn.feature_extraction.text import TfidfVectorizer

    phrase_one = 'This is Sparta'
    phrase_two = 'This is New York'

    vectorizer = TfidfVectorizer()
    X = vectorizer.fit_transform([phrase_one, phrase_two])

    # Vocabulary learned from both phrases
    # (get_feature_names() in scikit-learn versions before 1.0)
    vectorizer.get_feature_names_out()
    # array(['is', 'new', 'sparta', 'this', 'york'], dtype=object)

    # One TF-IDF vector per phrase
    X.toarray()
    # array([[0.50154891, 0.        , 0.70490949, 0.50154891, 0.        ],
    #        [0.40993715, 0.57615236, 0.        , 0.40993715, 0.57615236]])

This code snippet shows two texts, “This is Sparta” and “This is New York”. Our vocabulary has five words: “this”, “is”, “sparta”, “new” and “york”.

The vectorizer.get_feature_names_out() line shows the vocabulary. X.toarray() shows both texts as vectors, with the TF-IDF value for each feature. Note how, in the first vector, the second and fifth positions have a value of zero; those positions correspond to the words “new” and “york”, which are not in the first text. In the same way, the third position of the second vector is zero; that position corresponds to “sparta”, which is not present in the second text. But how do you compare the two vectors?

Using the dot product, it’s possible to find the cosine of the angle between two vectors; this is the concept of cosine similarity. With the texts as vectors, calculating the angle between them measures how close those vectors are and, hence, how similar the texts are. An angle of zero means the vectors point in the same direction, so the texts use the same words in the same proportions. As you may remember from your high school classes, the cosine of zero is 1, so the similarity of two TF-IDF vectors ranges from 0 (no words in common) to 1 (same direction).
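Here is a minimal sketch of that calculation with NumPy, applied to the two TF-IDF vectors from the “This is Sparta” example. The helper name `cosine` is my own; the formula is the usual dot product divided by the product of the vector lengths.

```python
import numpy as np


def cosine(u, v):
    """Cosine of the angle between u and v: dot(u, v) / (|u| * |v|)."""
    return np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))


# TF-IDF vectors for "This is Sparta" and "This is New York", copied from the example.
sparta = np.array([0.50154891, 0.0, 0.70490949, 0.50154891, 0.0])
new_york = np.array([0.40993715, 0.57615236, 0.0, 0.40993715, 0.57615236])

print(round(cosine(sparta, new_york), 3))  # about 0.411
print(round(cosine(sparta, sparta), 3))    # a vector against itself: 1.0
```

Only the shared words (“is” and “this”) contribute to the dot product, which is why the two phrases end up moderately similar.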

Finding the similarity between texts with Python

First, we load the NLTK and scikit-learn packages, then define a list with the punctuation symbols that will be removed from the text and a list of English stopwords.

    from string import punctuation

    from nltk.corpus import stopwords
    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.metrics.pairwise import cosine_similarity

    # Requires the NLTK stopword corpus: nltk.download('stopwords')
    language_stopwords = stopwords.words('english')
    non_words = list(punctuation)

Let’s define three functions: one to remove the stopwords from the text, one to remove punctuation, and a last one that receives a filename as a parameter, reads the file, converts the whole string to lowercase and calls the other two functions to return a preprocessed string.

    def remove_stop_words(dirty_text):
        """Return the text without stopwords or stray punctuation tokens."""
        cleaned_text = ''
        for word in dirty_text.split():
            if word in language_stopwords or word in non_words:
                continue
            cleaned_text += word + ' '
        return cleaned_text


    def remove_punctuation(dirty_string):
        """Return the string with every punctuation symbol removed."""
        for symbol in non_words:
            dirty_string = dirty_string.replace(symbol, '')
        return dirty_string


    def process_file(file_name):
        """Read a file and return its lowercased, cleaned content."""
        with open(file_name, "r") as file:
            file_content = file.read()
        # All to lower case
        file_content = file_content.lower()
        # Remove punctuation and English stopwords
        file_content = remove_punctuation(file_content)
        file_content = remove_stop_words(file_content)
        return file_content
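For a quick feel of what this preprocessing produces, here is a more compact sketch of the same three steps (lowercase, strip punctuation, drop stopwords). It is a variant, not the code used for the results in this post: `SAMPLE_STOPWORDS` is a tiny hypothetical stand-in for the NLTK list so the snippet runs without any download, and `preprocess` is a name I made up.

```python
from string import punctuation

# Hypothetical stand-in for the NLTK list, so the sketch runs without a download.
SAMPLE_STOPWORDS = {"this", "is", "the", "a", "of"}

# Translation table that deletes every punctuation character in one pass.
STRIP_PUNCTUATION = str.maketrans("", "", punctuation)


def preprocess(text, stopwords=SAMPLE_STOPWORDS):
    """Lowercase, strip punctuation, then drop stopwords."""
    words = text.lower().translate(STRIP_PUNCTUATION).split()
    return " ".join(word for word in words if word not in stopwords)


print(preprocess("Hello, world! This is the NLP post."))  # hello world nlp post
```

Unlike remove_stop_words, this variant does not leave a trailing space, which makes no difference to the vectorizer.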

Now, let’s call the process_file function to load the files with the text you want to compare. For my example, I’m using the content of three of my previous blog entries.

    nlp_article = process_file("nlp.txt")
    sentiment_analysis_article = process_file("sentiment_analysis.txt")
    java_certification_article = process_file("java_cert.txt")

Once you have the preprocessed text, it’s time to do the data science magic: we will use TF-IDF to convert each text to a vector representation, and cosine similarity to compare these vectors.

    # TF-IDF
    vectorizer = TfidfVectorizer()
    X = vectorizer.fit_transform([nlp_article, sentiment_analysis_article, java_certification_article])

    # Pairwise cosine similarity between all documents
    similarity_matrix = cosine_similarity(X, X)

The output of the similarity matrix is:

    [[1.         0.217227   0.05744137]
     [0.217227   1.         0.04773379]
     [0.05744137 0.04773379 1.        ]]

First, note the diagonal of 1s: this is the similarity of each document with itself. The value 0.217227 is the similarity between the NLP and the Sentiment Analysis posts. The value 0.05744137 is the similarity between the NLP and Java certification posts. Finally, the value 0.04773379 represents the similarity between the Sentiment Analysis and the Java certification posts. As the NLP and the Sentiment Analysis posts cover related topics, their similarity is greater than the one either holds with the Java certification post.
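If you would rather not maintain the cleaning functions yourself, TfidfVectorizer can do a comparable job on its own: it lowercases by default, its default tokeniser ignores punctuation, and stop_words="english" switches on scikit-learn's built-in stopword list (which is not identical to the NLTK one, so expect slightly different numbers). The sketch below uses three invented one-line stand-ins for the blog posts, not the real files.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

# Invented stand-ins for the three blog posts.
sample_docs = [
    "Natural language processing turns text into vectors.",
    "Sentiment analysis is a natural language processing task on text.",
    "The Java certification exam covers classes and interfaces.",
]

# lowercase=True is the default; stop_words="english" uses the built-in list.
sample_vectorizer = TfidfVectorizer(stop_words="english")
sample_matrix = cosine_similarity(sample_vectorizer.fit_transform(sample_docs))

print(sample_matrix.round(3))
```

The pattern matches the matrix above: the two NLP-flavoured texts score well above zero against each other, while the Java text shares no meaningful words with either.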

Similarity between Melania Trump and Michelle Obama speeches

With the same tools, you can calculate the similarity between both speeches. I took the texts from this article and ran the same script. This is the similarity matrix output:

    [[1.         0.29814417]
     [0.29814417 1.        ]]

If you skipped the technical explanation and jumped directly here to know the result, let me give you a summary: using an NLP technique, I estimated the similarity of two blog posts on related topics written by me. Then, using the same method, I estimated the similarity between the Melania and Michelle speeches.

Now, let’s analyse the results. The similarity between two blog posts written by the same author (me) about related topics (NLP and Sentiment Analysis) was 0.217227. The similarity between the Melania and Michelle speeches was 0.29814417. In other words, two speeches from two different people belonging to opposite political parties are more similar than two blog posts on related topics by the same author. I leave the final conclusion to you. The full code and the text files are on my GitHub repo.

Happy analysis!